With optical fiber, nearly ubiquitous 4G coverage, and 5G widely available since 2021, customers have become accustomed to getting everything instantly on request. When a product is slow, they are likely to leave for an alternative, since the internet usually offers several companies providing similar services. Performance is therefore a crucial business factor.

When building a system, it’s essential to prioritize efficiency, reliability, and high availability. Even if the software is well built, the infrastructure remains a significant challenge. You can have the most beautiful house in the world, but if it takes two days to reach, even the best real estate agent won’t be able to sell it. Infrastructure plays the same pivotal role in a system. Load balancing is one of the key infrastructure improvements, and it is the one we’ll explore in this article.

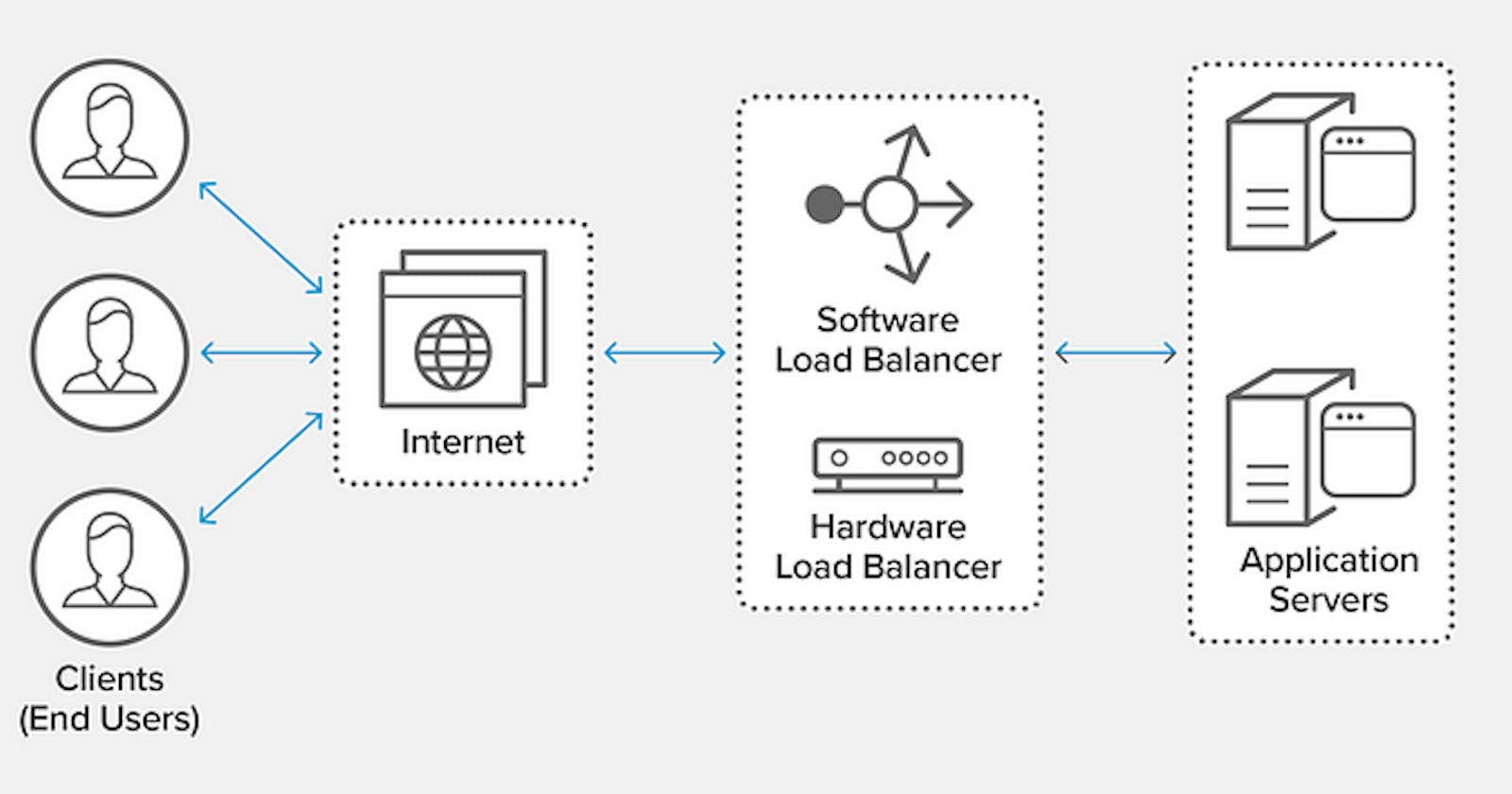

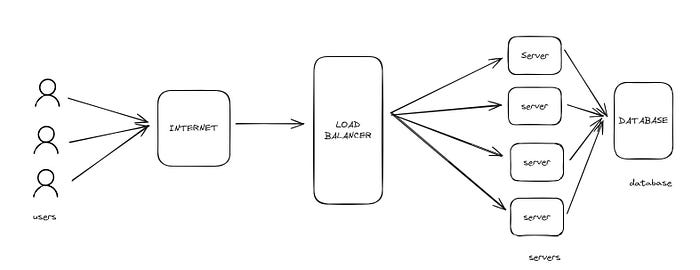

Load balancing is a technique used to distribute incoming network traffic or workload across multiple servers or applications. The primary goal is to ensure no single server bears too much load, preventing performance issues and optimizing resource utilization.
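To make the idea concrete, here is a minimal sketch of one common distribution strategy, round robin, in which each new request goes to the next server in a fixed rotation. The class name and the server names are hypothetical and chosen only for illustration; a real load balancer would also forward the request over the network.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the list endlessly: s1, s2, s3, s1, s2, ...
        self._rotation = cycle(servers)

    def pick(self):
        """Return the server that should handle the next request."""
        return next(self._rotation)


# Hypothetical server names, for illustration only.
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])

# Simulate nine incoming requests and count how many each server receives.
assignments = Counter(balancer.pick() for _ in range(9))
print(assignments)
```

With nine requests and three servers, each server ends up with three requests, so no single server carries the whole load.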

Load balancing is worth considering when:

- You have multiple servers or resources that can handle a particular task, such as serving a website or processing requests.
- You want to enhance performance and ensure high availability.
- You want to prevent any single point of failure.

When we face performance problems, we can think about vertical or horizontal scaling.

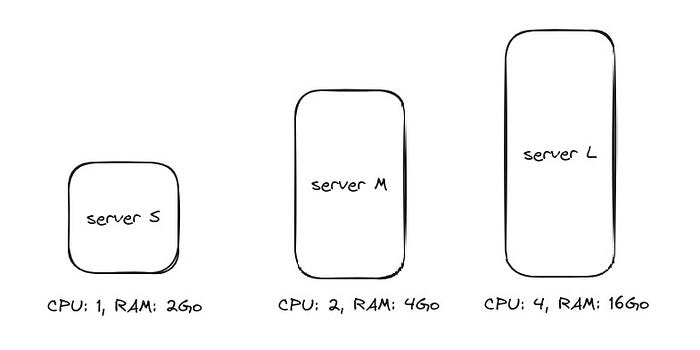

Vertical scaling involves increasing the capacity of a single server by adding more resources, such as CPU, RAM, or storage. While it has its merits, there are some issues associated with vertical scaling:

- Cost: Upgrading hardware components can be expensive, especially for high-end servers.
- Limited Scalability: There’s a limit to how much a single server can be scaled vertically. Eventually, you might reach the maximum capacity of available hardware.
- Single Point of Failure: If the single, scaled-up server experiences a failure, it can lead to downtime for the entire application or service.
- Downtime for Upgrades: Scaling vertically often requires downtime for hardware upgrades, impacting the availability of the system.
- Complexity: Managing a large, vertically scaled server can become complex, affecting maintenance and troubleshooting.

These limitations have driven the adoption of horizontal scaling, in which load balancing distributes the workload across multiple servers.

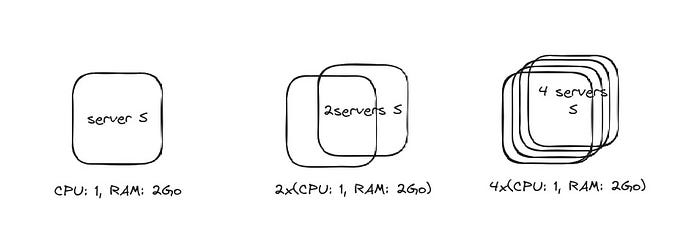

Horizontal scaling involves adding more machines or nodes to a system to handle increased load. Here are some advantages of horizontal scaling:

- Cost-Effective: Adding smaller, commodity hardware is often more cost-effective than investing in high-end, powerful servers.
- Improved Scalability: For workloads that can be split across nodes, capacity grows almost linearly with the number of nodes, making it easier to accommodate growing workloads.
- Enhanced Redundancy: Multiple nodes provide redundancy, reducing the risk of a single point of failure. If one node fails, the others can still handle the workload.
- Easier Maintenance: Maintenance can often be performed on one node at a time without affecting the overall system’s availability.
- Flexibility and Agility: Nodes can be added or removed dynamically to adapt to changing demand.
- Geographic Distribution: Nodes can be distributed across different geographical locations, improving performance and resilience.
- Scalable Storage: Horizontal scaling can also be applied to storage solutions, allowing large amounts of data to be handled efficiently.

Overall, horizontal scaling aligns well with the principles of modern, distributed computing and is widely adopted for building scalable and resilient systems.
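The redundancy and flexibility points above can be sketched in a few lines: a pool that rotates over its nodes, skips any node marked as failed, and lets nodes be added or removed at runtime. This is only an illustrative sketch. The `NodePool` class, its method names, and the node names are hypothetical, and a real system would detect failures with health checks rather than manual calls.

```python
class NodePool:
    """Rotate over nodes, skipping any that are marked unhealthy."""

    def __init__(self, nodes):
        self._nodes = list(nodes)
        self._down = set()
        self._next = 0  # index of the node to try first on the next pick

    def add(self, node):
        """Scale out: make a new node eligible for traffic."""
        self._nodes.append(node)

    def remove(self, node):
        """Scale in: take a node out of the pool entirely."""
        self._nodes.remove(node)
        self._down.discard(node)

    def mark_down(self, node):
        """Record that a node has failed (e.g. after a failed health check)."""
        self._down.add(node)

    def mark_up(self, node):
        """Record that a node has recovered."""
        self._down.discard(node)

    def pick(self):
        """Return the next healthy node, trying each node at most once."""
        for _ in range(len(self._nodes)):
            node = self._nodes[self._next % len(self._nodes)]
            self._next = (self._next + 1) % len(self._nodes)
            if node not in self._down:
                return node
        raise RuntimeError("no healthy node available")


# Hypothetical node names, for illustration only.
pool = NodePool(["node-a", "node-b", "node-c"])
pool.mark_down("node-b")  # simulate a failure
served = [pool.pick() for _ in range(4)]
print(served)  # node-b never appears while it is marked down

pool.add("node-d")  # add capacity without stopping the pool
print([pool.pick() for _ in range(3)])
```

While `node-b` is down, requests keep flowing to the remaining nodes, and `node-d` starts receiving traffic as soon as it is added.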

Load balancing helps optimize performance, ensure high availability, and manage resources efficiently. By distributing incoming traffic across multiple servers, it prevents any single server from being overloaded, makes the system easier to scale, and provides fault tolerance. It also gives you a single entry point through which traffic can be managed centrally.
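A rough back-of-the-envelope calculation shows why spreading traffic over several servers helps availability. The sketch below assumes that nodes fail independently, that any single healthy node can serve the traffic, and that the load balancer itself never fails. These are simplifying assumptions, and the 99% figure is a hypothetical input, not a measurement.

```python
def pool_availability(node_availability, node_count):
    """Probability that at least one of `node_count` independent nodes is up."""
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("node_availability must be between 0 and 1")
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    # The pool is down only when every node is down at the same time.
    all_down = (1.0 - node_availability) ** node_count
    return 1.0 - all_down


# Hypothetical per-node availability of 99%.
for count in (1, 2, 3):
    print(count, f"{pool_availability(0.99, count):.6f}")
```

Under these assumptions, going from one node to two moves availability from 99% to roughly 99.99%. In practice failures are rarely fully independent, so real gains are smaller.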

In the next article, I’ll provide an implementation of an infrastructure using nginx as a load balancer.
